
Facebook will start showing some public group conversations in people's News Feeds and search results

Facebook is expanding the reach of groups today with new features that could lead to more people participating in group conversations, but also potentially more exposure for harmful or dangerous communities. The company announced a range of changes for groups that include automated moderation and surfacing group conversations in people's News Feeds.

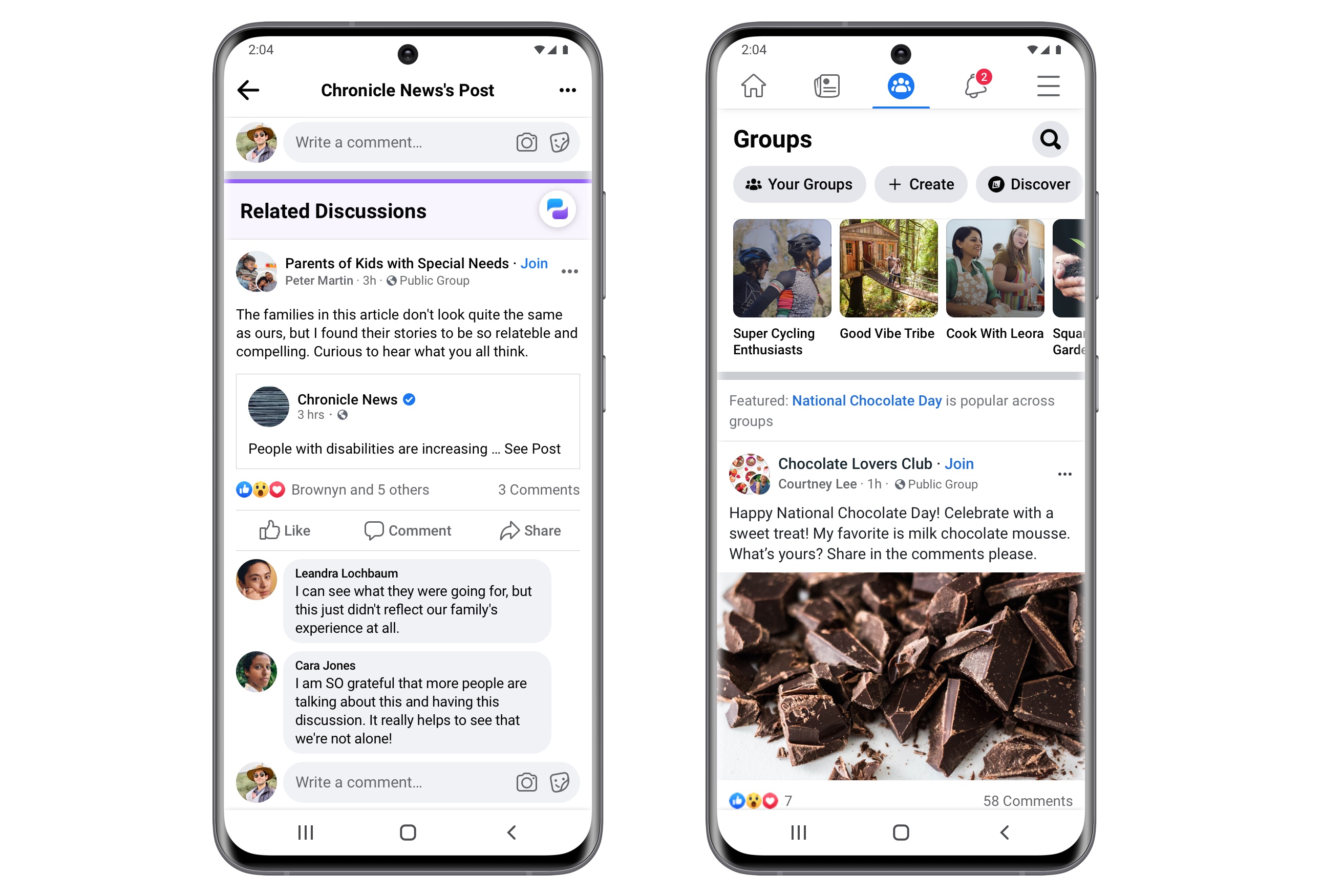

The most interesting update is launching as a test first. Facebook says it will start showing public group discussions in people's News Feeds. These can appear when someone shares a link or reshares a post. Below the post, users will be able to click to see related discussions about the same article or post taking place in public Facebook groups. The original poster will then be able to join the discussion without joining the group.

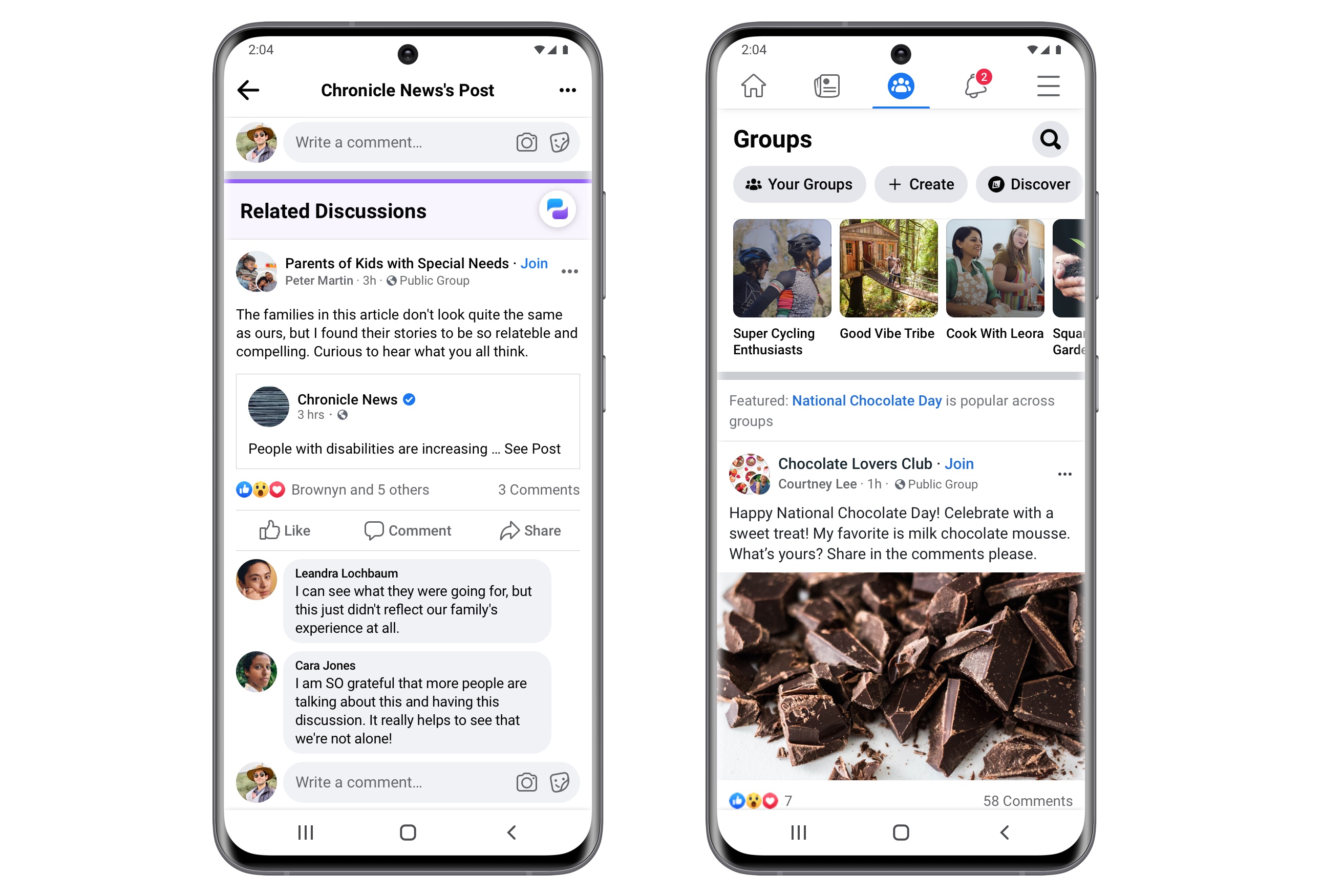

Recommended groups may also appear in a user's Groups tab if Facebook thinks they are relevant to that person's interests.

In addition, public group posts will start appearing in search results outside of Facebook, potentially giving them much greater reach and a much wider audience. Taken together, these updates could allow public groups to grow rapidly, which may backfire if dangerous individuals or groups spread misinformation. Facebook says any post rated false by a third-party fact-checker won't be eligible to appear through these features.

Once this related discussions feature rolls out, public groups might get bogged down with trolls or people who don't care about the culture the group is trying to foster. Admins will be able to set rules that prevent non-members from posting or that require people to be in the group for a certain period before posting, and Facebook is giving moderators tools to handle this potential influx.

The company is also introducing a new feature called Admin Assist that lets moderators set rules and have Facebook enforce them automatically. For example, certain keywords can be banned, or people who are new to the group can be barred from posting for a set amount of time; instead of flagging these posts for admins to approve or reject, Facebook will handle them automatically. For now, the types of restrictions moderators can set are limited, says Tom Alison, VP of engineering at Facebook. Moderators can't, for example, set a "no politics" rule in a group, a rule that became contentious over the past summer as the Black Lives Matter movement gained momentum in the US and around the world.

"Over time, we're going to look at how to make this more nuanced and capture the broader range of actions that admins might want to take, but right now, what we've concentrated on are some of the most common things admins are doing and how we can automate that, and we're going to add more as we hear from the admin community," Alison said in an interview with The Verge.

It's hard to see how these new features will keep discussions civil as people post links to political content. Related discussions could lead users down a dark rabbit hole, exposing them to extremist material and viewpoints from groups they never intended to interact with and may not realize are spreading misinformation or conspiracy theories.

Facebook has said it will restrict content from militia groups and other organizations linked to violence, but the company has struggled to define the boundaries of objectionable content, including posts from a self-described militia group in Kenosha, Wisconsin, where a 17-year-old militia member killed two people during a night of protests. The company also recently removed 200 accounts linked to hate groups.

In addition to all these changes, the company says it will offer moderators an online course and exam to help them learn how to "develop and maintain" their community.

As for product features, Facebook is bringing real-time chats back to groups and introducing Q&A sessions and a new post type called Prompts, which asks members to share a photo in response to a prompt. The photos are then combined into a slideshow. People will also be able to customize their profile for groups, meaning they can set a custom profile picture or adjust what details they share on a group-by-group basis. (Someone in a dog lovers group might want a profile picture of themselves with their dog, for example.)

Moderators are becoming more important to Facebook. They are the main gatekeepers of content, so keeping them engaged and informed is key to Facebook getting groups right, particularly as group content starts appearing across the web.