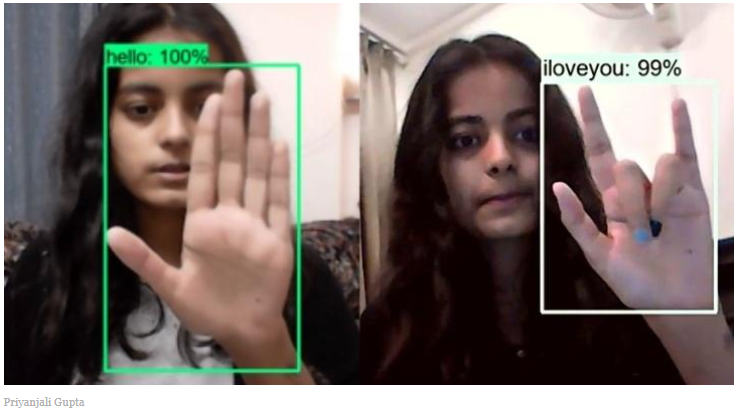

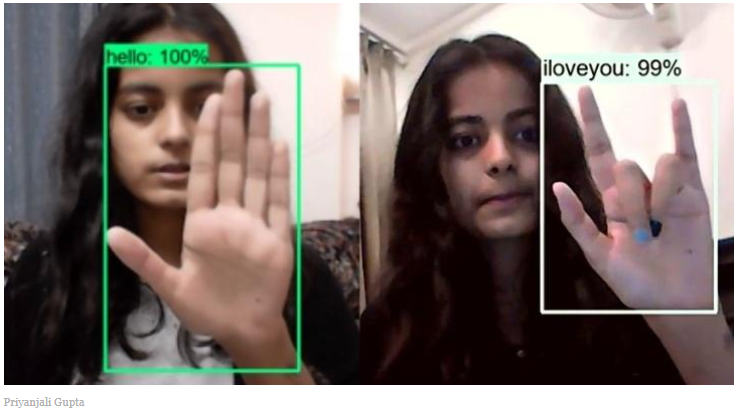

An engineering student builds an AI model that translates some American Sign Language signs into English in real time.

Communicating with someone who cannot speak or hear can be difficult, and although American Sign Language (ASL) exists, few people learn it.

While browsing LinkedIn, I came across a post by Priyanjali Gupta, who had built an AI model that translates some ASL signs into English, helping to bridge that gap.

Gupta, a student at the famed Vellore Institute of Technology, built it with the TensorFlow Object Detection API. At its core, the model relies on transfer learning from a pre-trained model, SSD MobileNet.

According to her GitHub write-up, she created the dataset manually by running a Python script (the Image Collection file in her GitHub repository) that captures photographs of ASL signs through a camera.
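To picture that capture step, here is a minimal Python sketch. It is not Gupta's script: the function name `collect_images`, the `collectedimages` folder layout and the file naming are assumptions made for illustration. Grabbing a frame and saving it are passed in as functions so the sketch runs without a camera; a real setup might instead wrap OpenCV's `cv2.VideoCapture` and `cv2.imwrite` (the latter does not create missing folders, so they would need to exist first).

```python
import os
import time
import uuid
from typing import Callable, Dict, List


def collect_images(
    grab_frame: Callable[[], object],
    save_image: Callable[[str, object], None],
    labels: List[str],
    images_per_label: int,
    out_dir: str = "collectedimages",  # hypothetical output folder
    pause_s: float = 0.0,
) -> Dict[str, List[str]]:
    """Capture `images_per_label` frames for each label and save each one
    as out_dir/<label>/<label>.<uuid>.jpg. Returns the saved paths per label."""
    saved: Dict[str, List[str]] = {}
    for label in labels:
        saved[label] = []
        for _ in range(images_per_label):
            frame = grab_frame()  # one still image from the camera (or a stub)
            # A random UUID keeps file names unique across capture sessions.
            path = os.path.join(out_dir, label, f"{label}.{uuid.uuid4()}.jpg")
            save_image(path, frame)
            saved[label].append(path)
            time.sleep(pause_s)  # time for the signer to adjust the pose
    return saved
```

For example, `collect_images(grab, save, ["hello", "thanks"], 15)` would save 30 images, 15 for each (hypothetical) sign. For an object detection model, such images would typically then be annotated with bounding boxes before training.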

Replying to a LinkedIn user, she acknowledged that building a deep learning model from scratch solely for sign detection is quite complex: “To build a deep learning model solely for sign detection is a really hard problem but not impossible and currently I'm just an amateur student but I am learning and I believe sooner or later our open source community which is much more experienced and learned than me will find a solution and maybe we can have deep learning models solely for sign languages.”

She added, “Therefore for a small dataset and a small scale individual project I think object detection did just fine. It's just the idea of inclusion in our diverse society which can be implemented on a small scale.”

While the project is still at a nascent stage, it’s commendable that developers are working toward more inclusive applications for the people who need them.